Satisfaction surveys are essential tools for evaluating the effectiveness of your e-Learning courses and collecting customer feedback on your educational offer. But did you know that many questionnaires fail to measure the real impact on employees' skills? Learn how to create a satisfaction survey that goes far beyond simply measuring satisfaction levels.

Why traditional satisfaction surveys fail to measure the real impact of training

Traditional satisfaction surveys have several fundamental limitations. They often focus on learners' immediate feelings rather than on actual learning or on applying skills in real work situations.

Standardized scoring scales (1 to 5) encourage acquiescence bias. Respondents tend to give positive answers by default, which skews your results and prevents you from identifying concrete problems.

The gap between expressed satisfaction and real impact can be substantial in the customer training experience. Modules that learners consider "pleasant" are not necessarily the ones that transform your team's professional practices.

The scientific foundations of an effective satisfaction questionnaire

Cognitive science provides a rigorous framework for developing truly relevant questionnaires. Your goal is not flattering customer reviews, but actionable data to improve your service.

- Principle of cognitive projection: formulate questions that project the employee into concrete application situations

- Mental effort measure: evaluate the cognitive load experienced during learning (neither too low nor excessive)

- Calibrated self-assessment: ask employees to assess their confidence in applying the knowledge they have gained

- Retention assessment: measure perceived medium-term retention rather than immediate comprehension

The fundamental difference between measuring customer satisfaction and evaluating impact lies in timing. Satisfaction is immediate, whereas impact is measured by the ability to mobilize knowledge over the long term, throughout the internal customer journey.

The 4 essential dimensions to measure in your questionnaire

To get a complete picture of the effectiveness of your training, your form should cover four complementary dimensions (profile and context, satisfaction, perceived value, and real impact) that will help you better understand the expectations of your customers.

Each dimension brings a piece to the assessment puzzle, allowing you to identify exactly where your training product excels and where it can improve.

16 essential questions to include in your questionnaire (with examples)

Profile questions and context

- What is your field of activity in the company? (offer multiple choices adapted to your market)

- Are you a team manager? (yes/no)

- What was your level of knowledge of the subject before starting this training? (scale from “I discover all the concepts” to “I already have expertise”)

- What is your main motivation for taking this course? (personal development, professional needs, specific interests...)

Satisfaction questions

- Would you recommend this course to a colleague? (0 to 10 scale, used to calculate the Net Promoter Score)

- On a scale of 1 to 6, how much do you agree with the statement: “I was bored doing this online training”?

- On a scale of 1 to 6, how much do you agree with the statement: “Compared to other online courses I have taken, I found this experience enjoyable”?

- What is your overall impression of the training? (check all that apply: too easy, too difficult, boring, fun...)
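The recommendation question is usually scored with the standard Net Promoter Score convention: answers of 9 or 10 count as promoters, 0 to 6 as detractors, 7 or 8 as passives, and the NPS is the percentage of promoters minus the percentage of detractors. Here is a minimal Python sketch, assuming you have exported the raw 0-10 answers as a list of integers; the function name and sample answers are illustrative only.

```python
def net_promoter_score(scores: list[int]) -> float:
    """Return the NPS (between -100 and 100) from 0-10 recommendation answers."""
    if not scores:
        raise ValueError("no responses")
    for s in scores:
        if not 0 <= s <= 10:
            raise ValueError(f"score out of range: {s}")
    promoters = sum(1 for s in scores if s >= 9)   # 9-10
    detractors = sum(1 for s in scores if s <= 6)  # 0-6; 7-8 are passives
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical answers from ten learners
answers = [10, 9, 8, 7, 6, 10, 3, 9, 8, 10]
print(net_promoter_score(answers))
```

Passives count in the denominator but not in the numerator, which is why a course can have a high average recommendation score and still a modest NPS.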

Perceived value assessment questions

- On a scale of 1 to 6, how much do you agree with the statement: “I think I have memorized the training content well”?

- On a scale of 1 to 6, how much do you agree with the statement: “This training provided me with useful elements for my professional practice”?

- On a scale of 1 to 6, how satisfied are you with what you have learned and with your ability to apply it in your daily life?

- This journey in its entirety allowed you to...? (multiple choice question: discover new concepts, review known information, improve my knowledge...)

Questions to measure real impact

- What are the 3 key elements that you remember from this training? (open question to gather qualitative feedback)

- What concrete actions are you going to implement in the 7 days following this training? (open question)

- To what extent will this training change your professional approach in the coming months? (scale from 1 to 6)

- Would you be interested in exploring certain themes in greater depth? If yes, which ones? (open question)

These questions provide a solid foundation for getting actionable feedback. Adapt them to your specific context, keeping only the most relevant ones if you need to stay within 10 to 15 questions, to maximize their relevance and facilitate your decision-making.

How to structure your questionnaire to maximize actionable responses

The structure of your satisfaction survey directly influences the quality and number of responses obtained. A thoughtful layout encourages participants to engage and to answer sincerely.

Pay particular attention to your introduction. Clearly explain the goals of the questionnaire and what you will do with the data collected. Also specify how long it takes to respond, ideally less than 5 minutes.

Expert advice

- Start with simple questions to engage the interviewee

- Switch between closed-ended questions (for quantitative data) and open-ended questions (for qualitative insights)

- Limit the total number of questions to 10-15 at most to avoid abandonment

- Choose even scales (1-6) that force respondents to take a position rather than remain neutral

- Vary the formulations to maintain the respondent's attention

- End with an open-ended question to get free feedback

The order of the questions should ideally follow a logical progression: profile, general satisfaction, perceived value, then impact. This sequence allows the learner to progressively engage in a deeper reflection on their customer training experience.

Integrating and distributing the questionnaire in your learning journey

The strategic positioning of your online survey in the learning journey is crucial for obtaining relevant feedback and a good response rate.

- Choose the optimal time: place your questionnaire at the end of the last module that the learners will complete

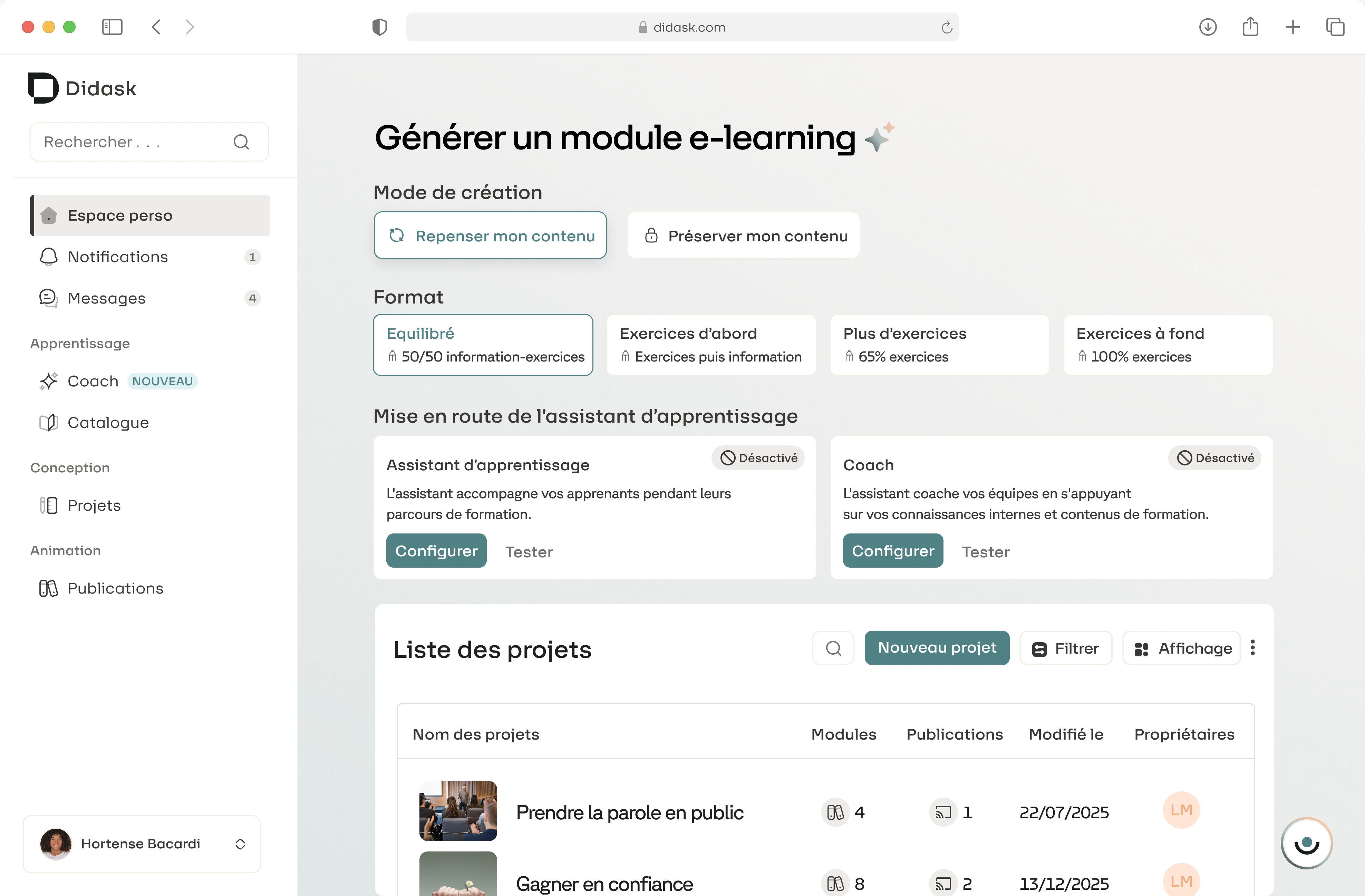

- Use Free Mode in Didask to easily integrate tools such as Google Forms, SurveyMonkey, or Typeform

- Create your form in the external tool of your choice with an intuitive interface

- Embed the link or iframe in a Didask information granule for a smooth experience

- Clearly communicate the importance of the questionnaire to respondents via email or social networks

The timing of distribution influences the nature of the feedback. A questionnaire sent immediately after training better measures on-the-spot satisfaction, while a deferred questionnaire (7 to 30 days later) more effectively assesses the real impact on professional practices.

For long courses, consider several measurement points: halfway through to adjust if necessary, at the end for overall satisfaction, and a few weeks later for the transformational impact.

From data to improvement: manually analyze the results

Analyzing the data collected requires a methodical approach to transform these insights into concrete improvements in your training product or service.

For quantitative analysis (scales, multiple choices), calculate the means and examine the distribution of responses. In particular, look for marked differences that point to specific areas of satisfaction or dissatisfaction in your internal market research.
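As an illustration, the sketch below summarizes one 1-6 item with its mean, its distribution, and the share of answers on the "agree" half of the scale (4 to 6). Looking at the distribution alongside the mean matters: two modules can share the same mean with very different spreads. The function name and the "agree share" indicator are assumptions made for this example, not a Didask feature.

```python
from collections import Counter
from statistics import mean


def summarize_scale(answers: list[int], low: int = 1, high: int = 6) -> dict:
    """Mean, per-point distribution (in %) and 'agree' share for one scale item."""
    if not answers:
        raise ValueError("no responses")
    if any(not low <= a <= high for a in answers):
        raise ValueError("answer outside the scale")
    counts = Counter(answers)
    n = len(answers)
    # Percentage of respondents who chose each point, including unused points
    distribution = {p: 100 * counts.get(p, 0) / n for p in range(low, high + 1)}
    # On an even 1-6 scale the midpoint is 3.5, so every answer falls on one side
    midpoint = (low + high) / 2
    agree_share = 100 * sum(1 for a in answers if a > midpoint) / n
    return {"mean": mean(answers), "distribution": distribution, "agree_share": agree_share}


# Hypothetical answers to "This training provided me with useful elements..."
useful_item = [6, 5, 5, 4, 2, 6, 1, 5]
summary = summarize_scale(useful_item)
print(summary["mean"], summary["agree_share"])
print(summary["distribution"])
```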

For qualitative analysis (open questions), use a structured methodology:

- Identify recurring themes in customer reviews

- Classify the verbatim comments according to these themes

- Quantify the frequency of occurrence of each theme

- Identify concrete improvement suggestions for your offer
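Once each comment has been coded by hand, counting theme frequencies is straightforward. The sketch below assumes you have recorded, for every verbatim comment, the themes you assigned to it; the comments and theme labels are invented for illustration. Because one comment can carry several themes, the percentages can add up to more than 100%.

```python
from collections import Counter

# Hypothetical coded data: (verbatim comment, themes assigned manually)
coded_comments = [
    ("Too much theory, not enough cases", ("too theoretical", "wants practical cases")),
    ("Great examples from our sector", ("relevant examples",)),
    ("I'd like more practical exercises", ("wants practical cases",)),
    ("Module 3 felt long and abstract", ("too theoretical", "too long")),
]


def theme_frequencies(coded: list[tuple[str, tuple[str, ...]]]) -> list[tuple[str, int, float]]:
    """Return (theme, count, % of comments mentioning it), most frequent first."""
    if not coded:
        return []
    counts = Counter(theme for _, themes in coded for theme in themes)
    n = len(coded)
    # Sort by descending count, then alphabetically so ties are deterministic
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [(theme, count, 100 * count / n) for theme, count in ranked]


for theme, count, pct in theme_frequencies(coded_comments):
    print(f"{theme}: {count} comments ({pct:.0f}%)")
```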

Then cross this data with the initial objectives of your training. The gap between educational intent and learners' perception reveals the priority areas for improvement in your next content.

Manual analysis of this data is certainly time-consuming, but it remains a reliable method for extracting relevant insights for your continuous improvement process.

How to complement your questionnaires with Didask's objective indicators

Satisfaction surveys provide valuable declarative data, but supplementing them with objective indicators greatly enriches your analysis report.

The Didask training platform provides you with several key indicators in your trainer dashboard:

- Completion rate: percentage of learners who have completed each module

- Time spent: average time spent on each activity

- Success rate: learners' performance in exercises and quizzes

- Blocking points: identification of activities where learners encounter difficulties

For a holistic analysis, combine these objective data with the subjective feedback from your questionnaires:

- Identify the modules with the lowest satisfaction rates

- Examine their corresponding learning statistics

- Determine if the challenges are related to content, design, or complexity

- Prioritize your improvement actions according to the expected impact for your employees
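The four steps above can be sketched as a small script, assuming you have copied the per-module indicators from the dashboard into a simple table alongside each module's mean satisfaction score. The field names, thresholds, and diagnostic rules below are hypothetical: a rough first-pass heuristic mapping low success to complexity, low completion to design, and otherwise to content, not a rule built into Didask.

```python
from dataclasses import dataclass


@dataclass
class ModuleStats:
    name: str
    satisfaction: float     # mean of a 1-6 questionnaire item
    completion_rate: float  # % of learners who completed the module
    success_rate: float     # % success on exercises and quizzes


def flag_modules(modules: list[ModuleStats], n_lowest: int = 2,
                 success_threshold: float = 60.0,
                 completion_threshold: float = 70.0) -> list[tuple[str, float, str]]:
    """Take the least satisfying modules and attach a first-guess cause."""
    lowest = sorted(modules, key=lambda m: m.satisfaction)[:n_lowest]
    report = []
    for m in lowest:
        if m.success_rate < success_threshold:
            cause = "complexity"  # learners fail the exercises
        elif m.completion_rate < completion_threshold:
            cause = "design"      # learners succeed but drop out
        else:
            cause = "content"     # learners finish and succeed: question relevance
        report.append((m.name, m.satisfaction, cause))
    return report


# Hypothetical figures for four modules
modules = [
    ModuleStats("Module 1", 4.8, 92.0, 85.0),
    ModuleStats("Module 2", 3.1, 88.0, 48.0),
    ModuleStats("Module 3", 3.4, 55.0, 80.0),
    ModuleStats("Module 4", 4.1, 90.0, 78.0),
]
for name, score, cause in flag_modules(modules):
    print(f"{name} (satisfaction {score}): investigate {cause}")
```

The thresholds are only a starting point; adjust them to your own baseline before using the output to rank improvement actions.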

This cross-analysis allows you to distinguish subjective perceptions from objective problems and make truly relevant adjustments to your training system.

Towards a cycle of continuous improvement of your training

Satisfaction surveys, when designed with a scientific approach, become powerful levers for transforming your e-learning courses and a significant competitive advantage.

By going beyond simply measuring satisfaction to assess the real impact on skills, you gain valuable insights to continuously optimize your learning paths and better meet the needs of your market.

The combination of subjective data from the questionnaires and the objective indicators available in Didask offers you a 360° view of the effectiveness of your training, without requiring a premium subscription or expensive advanced features.

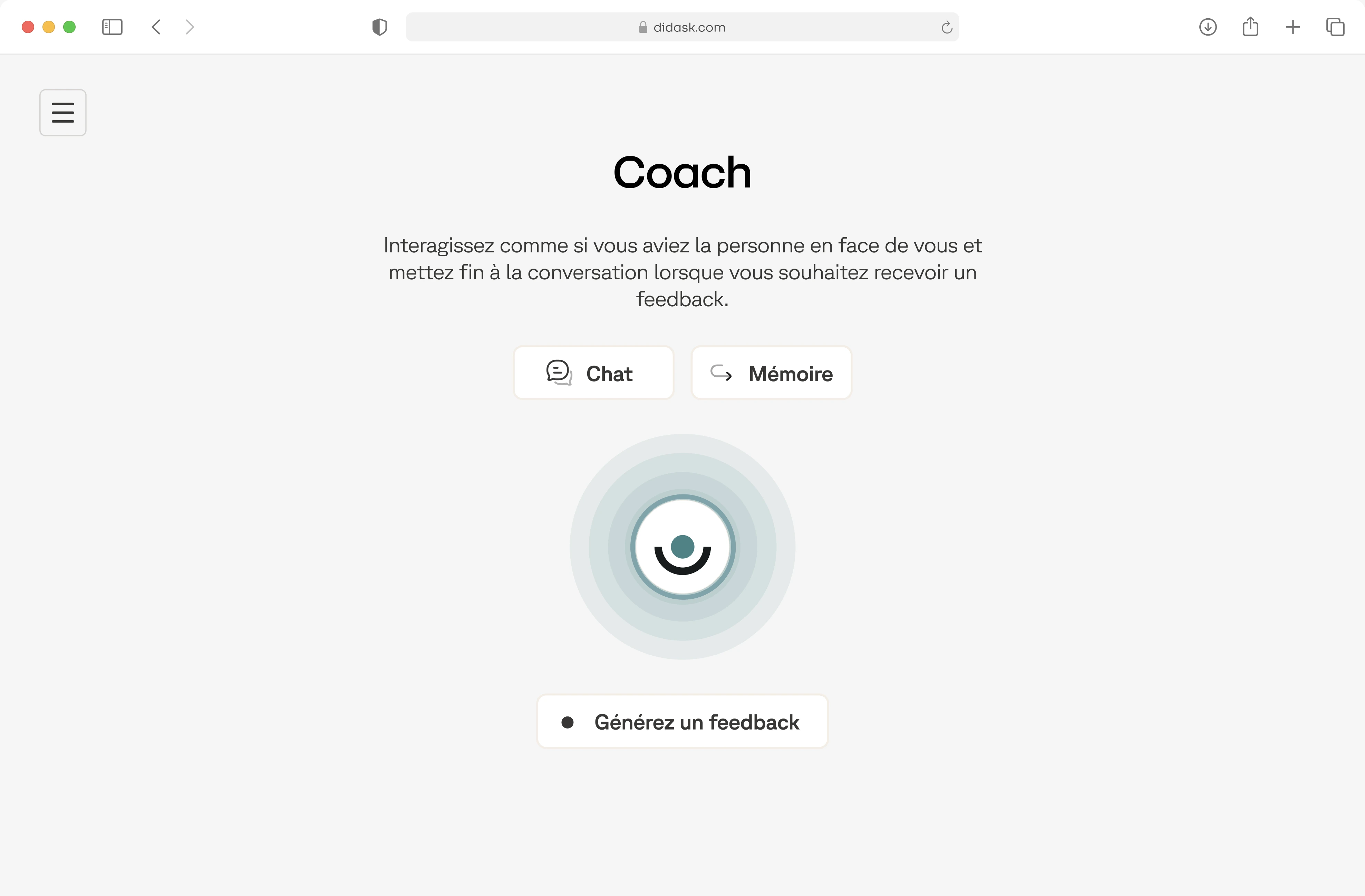

Ready to transform your questionnaires into real strategic tools? Discover how the Didask platform, based on cognitive science, can help you maximize the impact of your e-Learning courses and improve the satisfaction of your employees.