Few technologies have broken through as quickly and as widely as generative AI. Today, ChatGPT is used by hundreds of millions of people. However, the real revolution is taking place behind the scenes. The potential of Large Language Models (LLMs) goes well beyond answering, summarizing, and translating, tasks already regarded as “simple” in 2024.

Together, we will look at a more complex application of generative AI to a dilemma as old as time.

The expert or the teacher

The challenge of pedagogy is transmitting knowledge from the expert to the learner. However, an expert who is highly qualified in a field will not necessarily be able to pass on that knowledge effectively. That is the teacher's job, and the teacher is also an expert in a field of their own: pedagogy.

However, it is difficult to ask the expert to become a teacher, or the teacher to become an expert. This is where the educational engineer comes in: someone capable of understanding and organizing an expert's raw knowledge so that it is transmitted as effectively as possible.

From educational engineer to Prompt Engineer

An LLM can be thought of as an assistant that is given instructions to perform specific tasks. An educational engineer can therefore instruct an LLM to extract and organize an expert's raw knowledge.

Simplified, these instructions can be things like extracting the key messages from a piece of content (segmentation), suggesting a common mistake made by learners (reformulation), or building a debate around a concept (scenario). In addition to these pedagogical instructions, a variety of techniques are used to control the LLM.

This new field, which emerged at the same time as LLMs, is called “Prompt Engineering”. Here are a few examples.

Chain of Thought

This method enables complex reasoning by having the model work through intermediate reasoning steps.

For example, the LLM is first asked to imagine the learner's profile based on the chosen training and external information, and then to generate educational content that fits this profile. One explanation for why this works is that it emphasizes the lexical field of the desired result during generation. Showing the LLM the way makes it more likely that the generated tokens will follow that path.
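The two-step approach described above can be sketched as follows. This is a minimal illustration, not Didask's actual pipeline: the `LLM` type stands for any function that sends a prompt to a model and returns its text, and the function names and prompt wording are assumptions made for the example.

```python
from typing import Callable

# Hypothetical type: any function that takes a prompt and returns the model's text.
LLM = Callable[[str], str]


def build_profile_prompt(training_title: str, context: str) -> str:
    """Step 1: ask the model to reason about who the learner is."""
    return (
        f"Training: {training_title}\n"
        f"Context: {context}\n"
        "Describe the likely learner profile (role, prior knowledge, goals)."
    )


def build_content_prompt(training_title: str, profile: str) -> str:
    """Step 2: ask for content, with the intermediate result in the prompt."""
    return (
        f"Training: {training_title}\n"
        f"Learner profile: {profile}\n"
        "Write a short lesson adapted to this profile."
    )


def generate_lesson(llm: LLM, training_title: str, context: str) -> str:
    """Chain the two steps: the profile from step 1 feeds the prompt of step 2."""
    profile = llm(build_profile_prompt(training_title, context))
    return llm(build_content_prompt(training_title, profile))
```

Because the model is passed in as a plain function, the same chain can be tried with any provider, or with a stub while the prompts are being written.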

Emotional Stimuli

The quality of an LLM's output improves when emotional messages are included in the prompt.

For example, stating that a task is very important and that its success will be highly appreciated leads to a better-quality generation.
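In practice, this can be as simple as appending a sentence to an existing prompt. The sketch below assumes an illustrative stimulus sentence based on the example above; the constant and function names are hypothetical, and the best wording would have to be found by experimentation.

```python
# Illustrative wording only (an assumption for this sketch), based on the example above.
EMOTIONAL_STIMULUS = (
    "This task is very important, and a successful result will be highly appreciated."
)


def with_emotional_stimulus(prompt: str, stimulus: str = EMOTIONAL_STIMULUS) -> str:
    """Return the prompt with an emotional message appended as a final paragraph."""
    return f"{prompt.rstrip()}\n\n{stimulus}"


if __name__ == "__main__":
    print(with_emotional_stimulus("Summarize the key messages of this lesson."))
```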

Generation bait

It is very difficult to ask an LLM not to do something. If we explicitly say that we do not want something to appear in the generation, this strengthens the lexical field of that thing and makes it more likely to appear. To counter this, the Prompt Engineer asks the LLM to generate that thing separately, so that it can then be manually removed from the final response.

Typically, to make sure the LLM does not give away clues in a question, one can ask it to start by writing a first question that contains all the possible clues, and then a second question that contains none.
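The bait technique can be sketched as a prompt that asks for two clearly delimited sections, followed by a step that keeps only the second one. The `<bait>` and `<question>` tags, the prompt wording, and the function names are assumptions chosen for this example. The removal is shown here as a small parsing function, although it can just as well be done by hand.

```python
import re

# Hypothetical prompt template: the bait section gives the clues a place to go.
BAIT_PROMPT_TEMPLATE = (
    "Concept: {concept}\n"
    "First, between <bait> and </bait>, write a version of the quiz question "
    "that contains every possible clue to the answer.\n"
    "Then, between <question> and </question>, write another version of the "
    "question that contains no clues."
)


def build_bait_prompt(concept: str) -> str:
    """Fill the template for a given concept."""
    return BAIT_PROMPT_TEMPLATE.format(concept=concept)


def strip_bait(response: str) -> str:
    """Keep only the clue-free question and discard the bait section."""
    match = re.search(r"<question>(.*?)</question>", response, flags=re.DOTALL)
    if match is None:
        raise ValueError("no <question> section found in the response")
    return match.group(1).strip()
```

Raising an error when the expected section is missing makes it obvious when the model did not follow the requested format, instead of silently passing the bait through to the learner.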

Those who whisper in the ears of LLMs

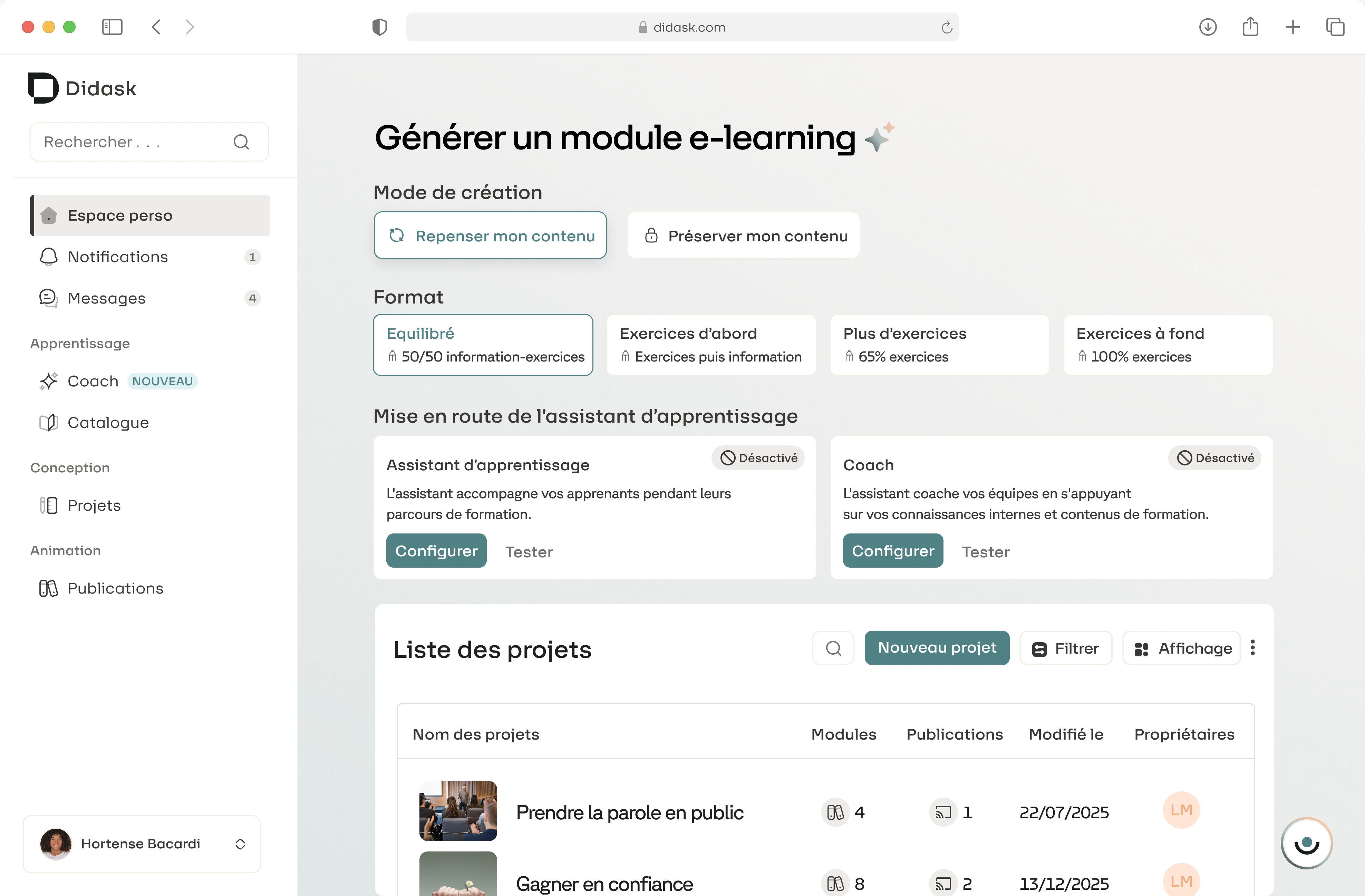

Armed with pedagogical knowledge and Prompt Engineer skills, the educational engineer can create a “copy” of their work processes and automate them. We were the first in our market to make this bet. Our Educational AI now comprises more than 30 cascading instruction sets (prompts), organized by educational objective.

Didask Educational AI has already helped hundreds of businesses create more than 3,000 hours of training for their employees. On average, this AI-assisted content was created 5 times faster than a traditional e-learning course.

And tomorrow?

The race for LLMs is on, and the arrival of serious competitors to GPT-4 is stimulating scientific research and its application in industry. Today, the Claude 3 (Anthropic), Mistral Large (Mistral AI), and Gemini Ultra (Google) models already offer capabilities comparable to GPT-4. The next generation of LLMs, which will open with the release of GPT-5, should push reasoning capacity even further and turn LLMs into true semi-autonomous agents, capable of organizing a series of tasks to solve the problem they are given.

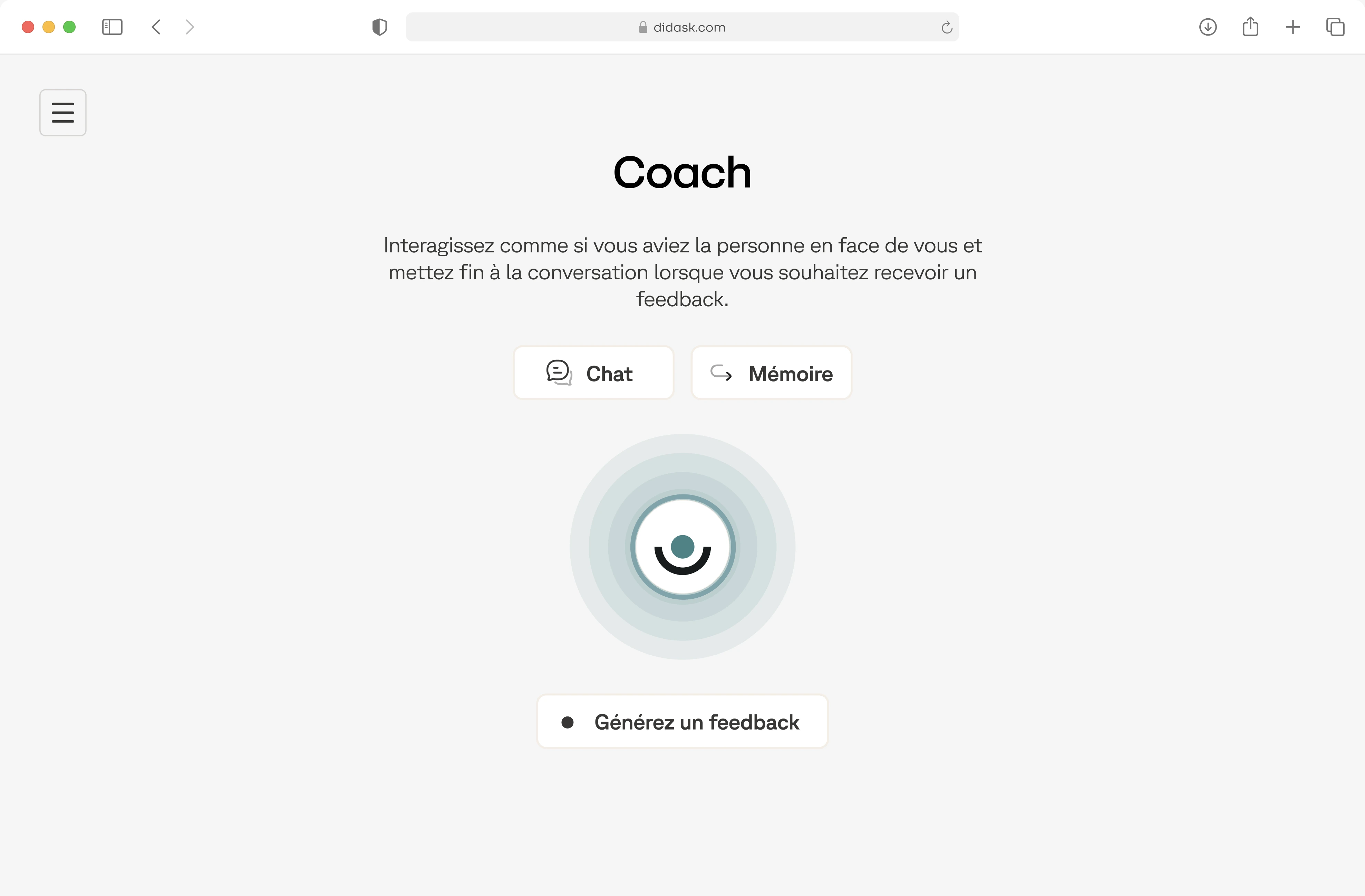

E-learning is just one step for Didask in our mission to improve everyone's skills and productivity. LLMs open the door to going even further, in particular into personalized Adaptive Learning, with an AI coach for everyone in the near future.

Sources to go further: Wei et al. 2022, Li et al. 2023
